Sleep disturbances, double vision, writer’s cramp. As some of you might recall, I was not fully conscious during our Young Investigator’s meeting two weeks ago, spending most of the time in a haze either hacking random commands into my laptop with sweaty palms or desperately trying to communicate with my neighbor in Unix, Perl or Loglan. Since then, people have remarked that I have lost weight and haven’t smiled. What has gotten a hold of me? It all started out with a small, innocent hard drive that made its way from Antwerp to Kiel.

What’s the diagnosis, doc? I was soon diagnosed with a common condition in the field of genetic science – exome shock. The little hard drive that Sarah Weckhuysen transferred from Antwerp to Kiel contained the RES exomes in VCF4 format. To the untrained eye, VCF4 is pure poison. You open such a file and are blown away by a seemingly endless number of lines starting with “##”. Somewhere further down the file, you finally see something that resembles useful genetic information (“Chr1”, “rs…”, “A”, “T”). To give you some idea of my history of present illness, I am a poorly prepared exome end user, a plain clinician used to beautifully annotated variant files: filtered against in-house controls, combined from various calling algorithms and elegantly trimmed down to a manageable list that can be viewed in Excel. With a simple filter function, you narrow the field down to the deleterious, high-coverage, not-in-1000-Genomes variant that is likely to cause your disease. I thought it was that simple.

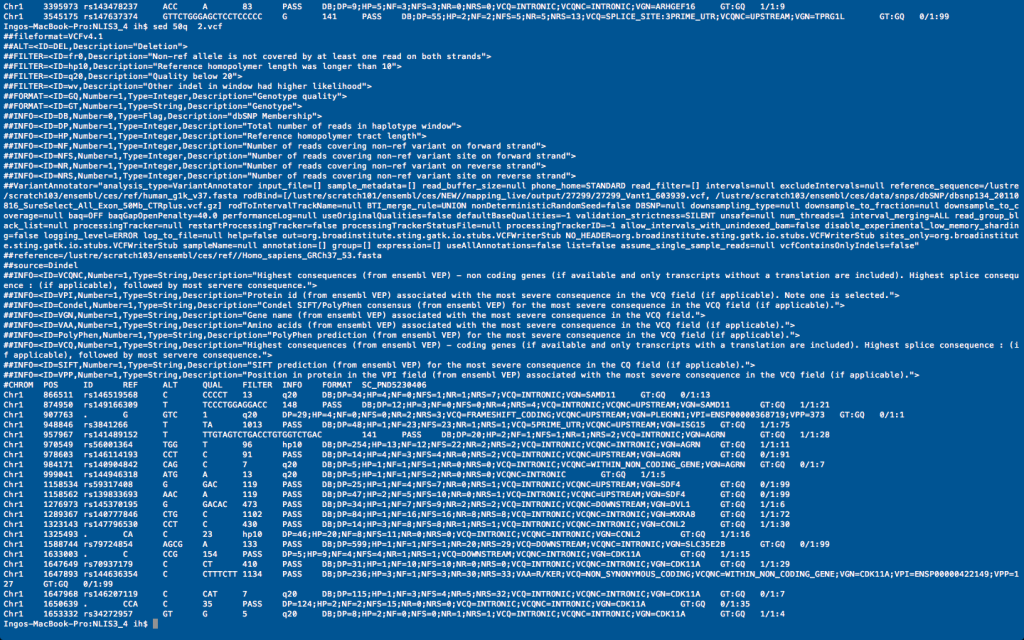

Oh, such poetry. The first 50 rows of a VCF4 file printed on the command line. Unix aficionados may realize that I used the “sed” command for this, which will hopefully earn me some points for style. On a more human level, a little command-line programming goes a long way and will help to convert files like this into a format that we can easily handle.
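For readers who want to go one step beyond printing the first rows, the basic layout of a VCF file can be captured in a few lines of Python. Per the VCF specification, lines starting with “##” are meta-information, a single header line starting with “#CHROM” names the columns, and every remaining tab-separated line is one variant with the fixed columns CHROM, POS, ID, REF, ALT, QUAL, FILTER and INFO (optionally followed by FORMAT and one column per sample). The function name `read_vcf` and the tiny example file are made up for illustration; this is a minimal sketch, not a full VCF parser, and it ignores INFO subfields and genotypes.

```python
import io

# Fixed columns defined by the VCF specification; FORMAT and sample columns may follow.
FIXED_COLUMNS = ["CHROM", "POS", "ID", "REF", "ALT", "QUAL", "FILTER", "INFO"]


def read_vcf(handle):
    """Split a VCF stream into meta-information lines and variant records (hypothetical helper).

    Minimal sketch: INFO and per-sample genotype fields are kept as raw strings or dropped.
    """
    meta = []
    records = []
    for line in handle:
        line = line.rstrip("\n")
        if not line:
            continue
        if line.startswith("##"):
            # '##' lines describe the file: format version, reference, INFO/FORMAT definitions
            meta.append(line)
        elif line.startswith("#CHROM"):
            # The header line names the columns; this sketch relies on the fixed ones
            continue
        else:
            fields = line.split("\t")
            record = dict(zip(FIXED_COLUMNS, fields[:8]))
            record["POS"] = int(record["POS"])
            records.append(record)
    return meta, records


# A made-up three-line VCF, for illustration only
example = (
    "##fileformat=VCFv4.1\n"
    "##reference=hg19\n"
    "#CHROM\tPOS\tID\tREF\tALT\tQUAL\tFILTER\tINFO\n"
    "chr1\t12345\t.\tA\tT\t50\tPASS\tDP=30\n"
)
meta, records = read_vcf(io.StringIO(example))
print(len(meta), records[0]["CHROM"], records[0]["POS"])  # prints: 2 chr1 12345
```

On a real file you would pass `open("your_file.vcf")` instead of the `StringIO` object; the “##” block, however long, simply ends up in `meta`.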

What is the Matrix? To some extent, I realized how Neo must have felt when he woke up in the real world after taking the red pill. I was looking at the real thing: long lists of variants scrolling down my screen for hours and hours. The data itself gave little indication of what is important and what is not. This was exome data, which has already been heavily processed by forces beyond your understanding, and yet it was already more than I could handle. What usually happens next is a process called annotation. Many people use Kai Wang’s ANNOVAR to convert this data file into a format that provides information for each variant on its frequency in controls, its position in the gene, the amino acid change it causes and its possible functional consequences. Most genome centers then add their own secret sauce: filtering against in-house controls, which removes variants that are called consistently across all individuals and that represent either artefacts or common variants that don’t have names yet. And don’t underestimate this last filtering step; it may bring the list of variants down from 30K to 5K or less. The server at the VIB in Antwerp is currently busy running a particularly comprehensive type of annotation for the RES trios, converting the Matrix files into even more complex, fully annotated 400GB files for complete patient-parent trios, which we use in EuroEPINOMICS-RES to identify possible causative variants in epileptic encephalopathies. So I could have simply leaned back and let people do their job, taking the blue pill to return to the dreamy state I was in before and waiting for my nicely prepared Excel variant files. But I had seen the Matrix, and it is hard to let go. I felt that this was something I could eventually understand.
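The in-house control filter described above can be sketched in a few lines, under the assumption that each variant is identified by chromosome, position, reference and alternate allele. The idea: count in how many in-house samples each variant was called, then drop patient variants that show up in too many of them. The helper names and the 10% cut-off are hypothetical choices for illustration, not the secret sauce of any particular genome center.

```python
from collections import Counter


def variant_key(record):
    """Identify a variant by chromosome, position, reference and alternate allele."""
    return (record["CHROM"], record["POS"], record["REF"], record["ALT"])


def inhouse_counts(cohort):
    """Count in how many in-house samples each variant was called.

    `cohort` is a list of per-sample variant lists; using a set means a variant
    listed twice in one sample is still counted once for that sample.
    """
    counts = Counter()
    for sample_variants in cohort:
        counts.update({variant_key(v) for v in sample_variants})
    return counts


def filter_inhouse(variants, counts, n_samples, max_fraction=0.1):
    """Keep variants called in at most `max_fraction` of in-house samples (hypothetical cut-off)."""
    return [v for v in variants if counts[variant_key(v)] / n_samples <= max_fraction]


# Made-up example: one variant recurs in all 10 in-house samples, another in just one
recurrent = {"CHROM": "chr2", "POS": 500, "REF": "G", "ALT": "A"}
rare = {"CHROM": "chr7", "POS": 900, "REF": "C", "ALT": "T"}
cohort = [[recurrent] for _ in range(10)]
cohort[0].append(rare)

counts = inhouse_counts(cohort)
patient = [recurrent, rare]
kept = filter_inhouse(patient, counts, n_samples=len(cohort))
print([v["POS"] for v in kept])  # prints: [900]
```

One design point worth keeping in mind: the patient under study should not be part of the in-house cohort used for counting, otherwise every one of their variants is seen at least once.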

There is no spoon, there is no pipeline. Computer people usually refer to semi-automated processes as pipelines. Take the poisonous VCF4 file, stick it into a pipeline, apply a rigorous panel of filters, and you will have a nicely annotated file. This is how it usually works. I deliberately say computer people rather than bioinformaticians. Many real bioinformaticians may be slightly appalled when asked to help you with this process, because there is nothing magic about it: you just apply filter after filter, and you don’t need any particular knowledge of computational algorithms, prediction programs, statistics or probabilities. It is simply a process of reducing data. Take copy number analysis for comparison. It involves a completely different level of complexity: signal intensities of markers are meshed, normalized and pumped into a storage-friendly binary file (Excel cannot open a file like this). An algorithm then converts these intensities into predicted gains and losses using a hidden Markov model. In contrast, exome data in VCF4 already contains all the information you need. All we have to do is take each variant and look up the corresponding information in other tables. No stats involved. For any single variant we could easily do this by hand; the problem is the sheer number of lookups that have to be performed.
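The “look it up in another table” step can be sketched with a Python dictionary keyed on the variant. Because a dictionary lookup is a hash lookup rather than a search through the whole table, annotating tens of thousands of variants takes a single pass over the list. This only illustrates the lookup idea and is not how ANNOVAR works internally; the annotation fields and values below are made up, and the sketch assumes both tables describe alleles in the same way (for example, the same normalization of indels).

```python
def annotate(variants, annotation_table):
    """Attach annotation fields to each variant by looking up its key in a table.

    `annotation_table` maps (CHROM, POS, REF, ALT) to a dict of extra fields.
    Variants without an entry are passed through unchanged.
    """
    annotated = []
    for v in variants:
        key = (v["CHROM"], v["POS"], v["REF"], v["ALT"])
        extra = annotation_table.get(key, {})
        annotated.append({**v, **extra})  # merge the original fields with the annotation
    return annotated


# Hypothetical annotation table with made-up values, for illustration only
table = {
    ("chr1", 12345, "A", "T"): {"GENE": "GENE1", "CONTROL_AF": 0.0},
}
variants = [
    {"CHROM": "chr1", "POS": 12345, "REF": "A", "ALT": "T"},
    {"CHROM": "chr3", "POS": 777, "REF": "G", "ALT": "C"},
]
result = annotate(variants, table)
print(result[0]["GENE"], "GENE" in result[1])  # prints: GENE1 False
```

Joining several tables (control frequencies, gene positions, predicted consequences) is just a matter of calling the same function once per table.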

We all have to become bioinformaticians or surrender. If you define bioinformatics as the distant field of science that does these filtering steps for you, you might want to reconsider. There is much to be gained from learning a little Unix or Perl to perform the sorting and filtering operations you need yourself. The book *Unix and Perl to the Rescue!* is a nice little introduction for life scientists and gives you a basic idea of how to apply simple steps yourself to sift through the data. More generally, we face a choice: either call a bioinformatician each time we run into problems, or reclaim some ground by moving closer to the data ourselves. By becoming little bioinformaticians and losing our fear of big data, we might be able to weather the flood of data that we expect in the future. Otherwise, we might end up in a strange situation where we apply for research funds only to pass them on to genome centers, which in turn produce data that we no longer understand.

BAM, here is your data – Curing exome shock. There is a funny truth to exome data: you don’t really need a genome center any more once the VCF4 file has been produced. The remaining data is easily manageable on a five-year-old desktop computer. One approach to curing exome shock is to feel how the data moves and wiggles in your hands as you handle it. Trial and error with different filters, selection strategies, etc. can help you develop a gut feeling for exomes, and a little scripting in Unix/Perl/Python might go a long way. Sifting through exome data can be particularly rewarding, since you can always test how well your strategies work. Exome data comes with BAM files, large binary alignment files that can be browsed with the Integrative Genomics Viewer (IGV). These files show you the individual reads aligned at a particular position within the exome. I have come to feel that getting closer to the data is probably my cure for exome shock. Having said this, I would like to leave you with another Matrix quote (Morpheus to Neo):
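A small illustration of the trial-and-error approach: if each filter is a named function applied in sequence, you can reorder, drop or tweak filters and immediately see how many variants survive each step. The filters mirror the deleterious, high-coverage, not-in-1000-Genomes criteria mentioned earlier, but the field names (`DP`, `KG_AF`, `DELETERIOUS`), the depth cut-off of 20 and the example variants are all hypothetical; an annotated file from your own pipeline will use its own column names and values.

```python
def apply_filters(variants, filters):
    """Apply named filters in order and report how many variants survive each step."""
    remaining = list(variants)
    report = [("input", len(remaining))]
    for name, keep in filters:
        remaining = [v for v in remaining if keep(v)]
        report.append((name, len(remaining)))
    return remaining, report


# Hypothetical field names and cut-offs; adapt to whatever your annotated file provides
FILTERS = [
    ("high coverage (DP >= 20)", lambda v: v["DP"] >= 20),
    # A missing 1000 Genomes frequency is treated as "not observed" (an assumption)
    ("not in 1000 Genomes", lambda v: v.get("KG_AF", 0.0) == 0.0),
    ("predicted deleterious", lambda v: v.get("DELETERIOUS", False)),
]

# Made-up variants, for illustration only
variants = [
    {"ID": "v1", "DP": 45, "KG_AF": 0.0, "DELETERIOUS": True},
    {"ID": "v2", "DP": 8, "KG_AF": 0.0, "DELETERIOUS": True},
    {"ID": "v3", "DP": 60, "KG_AF": 0.12, "DELETERIOUS": True},
    {"ID": "v4", "DP": 33, "DELETERIOUS": False},
]
kept, report = apply_filters(variants, FILTERS)
for step, n in report:
    print(f"{step}: {n}")
print([v["ID"] for v in kept])
```

Running this prints the count after each step (4, 3, 2, 1) followed by `['v1']`. Surviving candidates are exactly the ones worth a closer look at the read level in IGV.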

Sooner or later you will realize that there is a difference between knowing the path and walking the path.